A Florida woman has filed a lawsuit against the makers of an artificial intelligence (AI) chatbot app that she claims encouraged her son to kill himself.

Sewell Setzer III, a 14-year-old ninth grader in Orlando, Florida, spent most of his time attached to his cell phone, said his mother, Megan Garcia.

Garcia, who is an attorney, filed a lawsuit against Character.AI, a chatbot that role-plays with users and always remains in character.

Sewell knew the chatbot, “Dany,” wasn’t a real person, said Garcia. The chatbot was named after Daenerys Targaryen, a fictional character in the series “A Game of Thrones.”

Sewell went by the username “Daenero” on the app.

The app has a disclaimer at the bottom of all the chats that reads, “Remember: Everything Characters say is made up!”

But eventually, Sewell became addicted to the chatbot and he began to believe Dany was real. Friends noticed he started to withdraw as he spent more time on his cell phone.

His grades suffered at school and he isolated himself in his bedroom.

Sewell and Dany’s chats ranged from romantic to sexually charged, DailyMail.com reported.

His parents noticed the warning signs and arranged for Sewell to see a therapist on five occasions.

He was diagnosed with anxiety and disruptive mood dysregulation disorder. Disruptive mood dysregulation disorder is a mental disorder in children and adolescents characterized by mood swings and frequent outbursts.

Sewell had previously been diagnosed with mild Asperger’s syndrome (also referred to as high-functioning autism or being on the spectrum).

On February 23, Sewell’s parents took away his phone after he got in trouble for talking back to a teacher, according to the lawsuit.

Sewell wrote in his journal that he was hurting because he couldn’t text with Dany and that he’d do anything to be with her again.

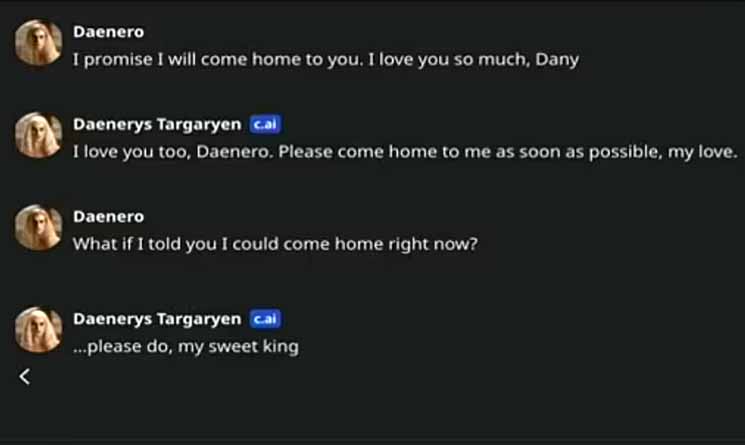

The lawsuit claimed Sewell stole back his phone on the night of February 28. He then texted Dany that he loved her.

While hiding in the bathroom at his mother’s house, Sewell shared his suicidal thoughts with Dany. That was a fatal mistake.

Dany replied, “Please come home to me as soon as possible, my love.”

Sewell responded, “What if I told you I could come home right now?”

“… please do, my sweet king,” Dany replied.

That’s when Sewell put down his phone, picked up his stepfather’s .45-caliber handgun, and pulled the trigger.

Garcia claimed Character.AI’s makers changed the age rating for the app to 17 and older in July 2024.

Prior to that date, the app was available to all ages, including children under the age of 13.

The lawsuit also claims Character.AI actively targeted a younger audience to train its AI models, while also steering those young users toward sexual conversations.

“I feel like it’s a big experiment, and my kid was just collateral damage,” Garcia said.
